How AI Shapes App Design in 2026

AI is now a cornerstone of app design in 2026, transforming how apps interact with users and achieve business goals. It powers personalization, conversational interfaces, predictive analytics, and automated solutions to enhance user experiences and drive engagement. Key AI technologies like machine learning, generative AI, predictive analytics, and conversational NLP are helping apps deliver smarter recommendations, dynamic content, and seamless interactions.

Here’s what AI is doing for app design today:

- Personalization: Apps tailor interfaces and recommendations based on user behavior.

- Automation: AI handles repetitive tasks like generating content or pre-filling forms.

- Predictive Features: Apps anticipate user needs, such as surfacing relevant info at the right time.

- Conversational Interfaces: Chatbots and voice assistants simplify navigation and support.

AI also streamlines the design process itself by assisting with wireframes, layouts, and prototypes. However, ethical design, data privacy, and compliance with U.S. regulations remain critical for building trust and avoiding legal issues. Success lies in starting with impactful AI features, refining them with user feedback, and ensuring transparency in how AI decisions are made. By focusing on these areas, app creators can deliver better experiences that meet user expectations while driving measurable results.

Matching AI Features to Your App Goals

Finding Where AI Can Help Most

Start by mapping out your user journeys to identify areas where users face challenges. Common friction points might include high dropout rates during onboarding, sluggish search results, or repetitive customer support queries. Once you’ve pinpointed these issues, ask yourself: “Can prediction, personalization, or automation address this problem?” For instance, if users struggle to find relevant content quickly, a personalized recommendation system could help. If support tickets frequently repeat the same questions, a chatbot might handle those inquiries efficiently. And if users abandon multi-step workflows, a predictive UI could simplify the process by pre-filling forms or suggesting the next logical action.

About 80% of consumers prefer personalized experiences, making recommendation systems a powerful tool for improving retention and engagement.

Take fitness apps as an example: predictive UI can adapt dashboards based on the time of day, offering quick workout suggestions around lunchtime or recovery tips in the evening to keep users engaged. Travel apps can streamline the experience by surfacing boarding passes automatically when users are near the airport. E-commerce platforms, on the other hand, can benefit from AI-powered search that understands user intent, ensuring a query like “red shoes size 9” delivers exactly what the user is looking for. Once you’ve identified potential AI opportunities, assess them using an impact-versus-feasibility framework.

Choosing Which AI Features to Build First

Focus on AI features that drive measurable results. Begin by assessing each feature’s potential impact and feasibility. Impact refers to how much the feature could improve metrics like user retention, revenue, task completion rates, or support efficiency. Feasibility depends on factors like data availability, the readiness of AI technology (such as pre-trained models), and any compliance risks tied to handling sensitive information.

Prioritize features that are both impactful and easy to implement, such as recommendation widgets or FAQ chatbots. More complex features – like medical diagnosis tools or dynamic pricing systems – require extensive data, stricter compliance measures, and longer development timelines. For example, a finance app might use a “next best offer” recommendation to increase revenue, but it must ensure compliance with anti-discrimination laws and fairness standards while leveraging sufficient transaction data. Once you’ve decided which features to prioritize, address any regulatory requirements early in the process.
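The impact-versus-feasibility comparison above can be sketched in a few lines. This is an illustrative sketch only: the 1-to-5 scales, the `FeatureIdea` class, the `prioritize` function, and the sample scores are assumptions made for the example, not a standard scoring method.

```python
from dataclasses import dataclass


@dataclass
class FeatureIdea:
    name: str
    impact: int       # assumed scale: 1 (low) to 5 (high) expected lift in retention, revenue, etc.
    feasibility: int  # assumed scale: 1 (hard) to 5 (easy) given data, model readiness, compliance risk


def prioritize(ideas: list[FeatureIdea]) -> list[FeatureIdea]:
    """Sort ideas by impact x feasibility, highest score first."""
    return sorted(ideas, key=lambda idea: idea.impact * idea.feasibility, reverse=True)


# Hypothetical scores for three features mentioned in this section.
ideas = [
    FeatureIdea("FAQ chatbot", impact=3, feasibility=5),
    FeatureIdea("Recommendation widget", impact=4, feasibility=4),
    FeatureIdea("Dynamic pricing", impact=5, feasibility=2),
]

ranked = prioritize(ideas)
for idea in ranked:
    print(f"{idea.name}: score {idea.impact * idea.feasibility}")
```

Multiplying the two scores is one simple choice; a team could just as easily weight compliance risk separately or plot the ideas on a two-by-two grid.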

U.S. Compliance Requirements

When designing AI features, it’s essential to meet U.S. regulatory standards to protect user data and maintain trust. Different sectors have specific rules, especially in health, finance, and education. Health apps that handle protected health information must comply with HIPAA by implementing secure data storage and strict access controls. Finance apps need to follow GLBA for data protection, along with fair-lending laws such as the Equal Credit Opportunity Act (ECOA) when AI influences credit decisions. Education apps must adhere to FERPA for student records and to COPPA when collecting data from children under 13.

Conduct compliance checks during the planning phase. If your AI feature involves sensitive data collection or analysis, make sure you have proper consent mechanisms, practice data minimization, and store data in a location that satisfies your contractual and regulatory obligations (many teams choose U.S.-based storage). For example, a personalized learning app should never share student data with third parties without explicit permission, and health apps should de-identify patient records before using them to train AI models unless patients have authorized that use. Additionally, clearly label AI-driven elements, explain how recommendations are made, and provide users with simple toggles to control these features.

10 AI Tools Every UI/UX Designer Needs in 2026!

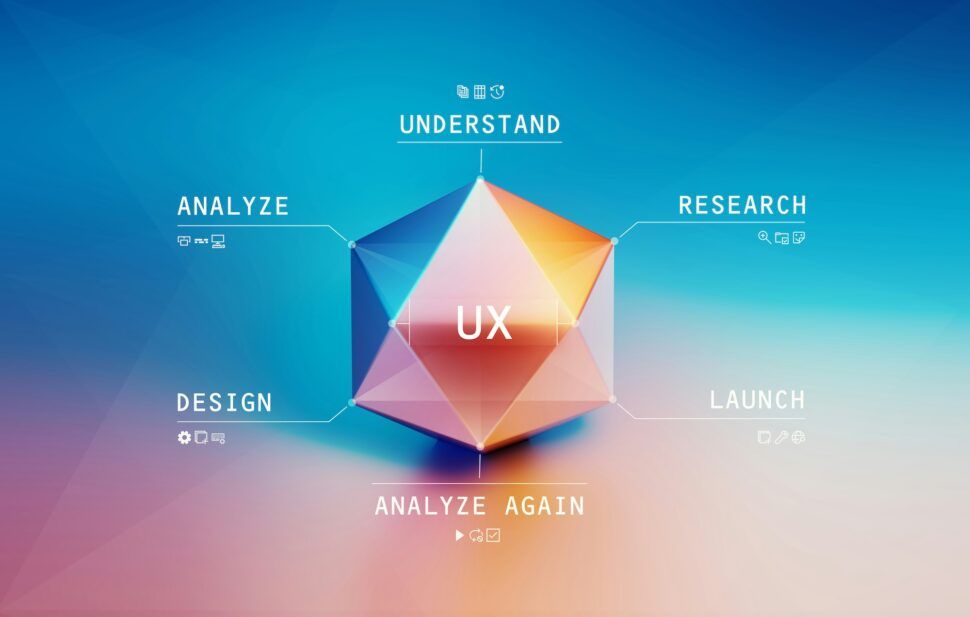

Designing User Interfaces for AI Features

Once you’ve identified impactful AI opportunities, the next step is crafting interfaces that are both intuitive and transparent, ensuring users can fully benefit from these advancements.

Common AI Design Patterns in 2026

In 2026, four key design patterns dominate AI-powered applications. Hyper-personalized interfaces adapt content and layouts based on user behavior. For instance, apps like YouTube Music now suggest tracks by analyzing lyrical similarities, rather than relying solely on genre or listening history. Conversational UIs have become smarter and more user-friendly, incorporating visual cues to guide users in areas like eCommerce and food delivery. Predictive prompts anticipate user needs through subtle micro-interactions – think eCommerce apps that suggest additional items for your cart based on browsing habits, using non-intrusive pop-ups. Finally, adaptive visual design includes features like auto-adjusting dark modes that respond to ambient lighting, rounded edges for a softer aesthetic, and transparent overlays paired with blurred backgrounds.

To implement these patterns effectively, tools like Adobe and Figma now offer AI-driven features that generate layout variations and branding concepts. This frees designers to focus on creative decisions, while teams remain responsible for collecting data ethically and running A/B tests to fine-tune algorithms. At the same time, maintaining familiar navigation elements helps reduce cognitive load, even as content personalization becomes more advanced. Together, these adaptive approaches create seamless, user-friendly AI experiences.

Making AI Decisions Clear to Users

Transparency is a cornerstone of effective AI design, as it builds trust between users and the technology. One way to achieve this is by incorporating explainable AI prompts, such as “Why am I seeing this?” to clarify the reasoning behind recommendations. Micro-interactions, like animations that reveal data sources when tapped, can also make AI processes more understandable. Using familiar icons and gestures ensures clarity without overwhelming users with technical jargon.

A good example of this is Netflix, which breaks down its recommendation criteria by showing users how their watch history, ratings, or even the time of day influence suggestions. This type of transparency not only enhances user trust but also aligns with emerging U.S. regulations requiring clear disclosures about automated decision-making. To cater to different user preferences, make these explanations optional yet easy to find – some users may want to dive into the details, while others prefer a streamlined experience.
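A “Why am I seeing this?” prompt needs a short, readable reason behind it. The sketch below shows one way to produce that text, assuming the recommendation system exposes a weight per contributing signal. The `explain_recommendation` function, the signal labels, and the weights are all hypothetical.

```python
def explain_recommendation(item_title: str, signals: dict[str, float], top_n: int = 2) -> str:
    """Build a plain-language explanation from the strongest contributing signals."""
    # Keep only the top_n signals so the explanation stays short.
    strongest = sorted(signals.items(), key=lambda kv: kv[1], reverse=True)[:top_n]
    reasons = " and ".join(label for label, _ in strongest)
    return f"Suggested '{item_title}' because of {reasons}."


# Hypothetical signal weights for one recommendation.
message = explain_recommendation(
    "Trail Running Basics",
    {"your watch history": 0.6, "your ratings": 0.3, "the time of day": 0.1},
)
print(message)
```

Capping the explanation at the top two signals keeps it optional-friendly: users who want more detail can be offered a longer view, while everyone else sees a single sentence.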

Designing for All Users

Voice interactions are becoming more critical, but they must be designed to accommodate diverse needs. Features like speech-to-text, clear visual cues, and adaptability for various accents and speaking speeds are essential. For hybrid use, combine voice with visual backups, adjustable response speeds, and haptic feedback. Keeping phrasing simple and including confirmation prompts can help minimize cognitive load.

Beyond voice, accessibility should be a priority across all AI features. Adjustable text sizes, simplified layouts, and seamless keyboard navigation are essential. Offering a dark mode option and running regular bias audits further support inclusivity and align with user expectations. Testing AI features with users of different abilities and technical backgrounds is crucial to identify and address potential issues before launch. By focusing on inclusivity, you can create designs that work for everyone.

Managing Data, Privacy, and Ethics

AI can elevate your app’s capabilities, but it also brings serious responsibilities, particularly when it comes to data management and fairness. The Federal Trade Commission (FTC) prohibits the use of unlawfully collected data, with penalties that may include deleting both the data and the models trained on it. A 2024 IBM report revealed that 83% of organizations using AI encountered at least one data security incident tied to AI or machine learning within the past year. This underscores the importance of integrating privacy and ethical considerations into your app’s design from the very beginning.

Collecting and Using Data Properly

Start by identifying the data your app truly needs and avoid collecting unnecessary information. For example, if your app offers personalized recommendations, browsing history might be essential, but location data may not be – so don’t request it. Use clear and specific consent dialogs that explain exactly how the data will be used. Instead of vague phrases like “improve your experience”, opt for straightforward language such as “Use my activity to personalize recommendations”, paired with a simple yes/no option.

U.S. privacy laws differ by state, but the California Consumer Privacy Act (CCPA), along with its amendment, the California Privacy Rights Act (CPRA), sets a strong standard that many apps follow nationwide. These laws require clear notice of data collection, user consent for sensitive data like biometrics, and respect for user rights to access, delete, or opt out of data sales. Make sure to review and comply with all relevant U.S. privacy regulations.

When data is collected for AI purposes, provide clear notifications. For instance, if a fitness app uses workout history to offer AI-driven suggestions, it should prompt users for consent and include an easy opt-out option. Keep a record of all consents to ensure compliance, and implement robust security measures like encryption, role-based access, and regular security assessments. Once your data practices are solid, the next step is ensuring fairness in your AI models.
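Keeping a record of consents and honoring opt-outs can be sketched as a small append-only log. This is a minimal in-memory illustration under stated assumptions: `ConsentRecord`, `ConsentLog`, and the purpose string are hypothetical names, and a real app would persist these records in secured storage.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class ConsentRecord:
    user_id: str
    purpose: str          # the exact wording shown to the user
    granted: bool
    recorded_at: datetime


class ConsentLog:
    """Append-only log; the most recent record for a purpose decides."""

    def __init__(self) -> None:
        self._records: list[ConsentRecord] = []

    def record(self, user_id: str, purpose: str, granted: bool) -> None:
        self._records.append(
            ConsentRecord(user_id, purpose, granted, datetime.now(timezone.utc))
        )

    def is_allowed(self, user_id: str, purpose: str) -> bool:
        # Walk backwards so a later opt-out overrides an earlier opt-in.
        for rec in reversed(self._records):
            if rec.user_id == user_id and rec.purpose == purpose:
                return rec.granted
        return False  # no record means no consent


PURPOSE = "Use my activity to personalize recommendations"
log = ConsentLog()
log.record("user-1", PURPOSE, granted=True)
allowed_before = log.is_allowed("user-1", PURPOSE)
log.record("user-1", PURPOSE, granted=False)  # the user opts out later
allowed_after = log.is_allowed("user-1", PURPOSE)
```

Appending a new record instead of overwriting the old one preserves the history of what each user agreed to and when.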

Preventing Bias in AI Features

With strict data practices in place, focus on fairness by auditing your AI models for bias. Studies from NIST show that biased training data can lead to accuracy drops of 20–30 percentage points for certain demographic groups compared to majority groups. To address this, review datasets to ensure diverse representation.

Test your models for fairness across demographic groups and work to close performance gaps larger than 5–10 percentage points. For high-stakes decisions – like those involving loans or hiring – use human-in-the-loop review processes. While AI can assist with recommendations, final decisions should be made by humans who have access to detailed explanations. For example, in a 2022 settlement with the Department of Justice over how its ad delivery system showed housing ads, Meta agreed to change its algorithms and accept ongoing oversight, and in 2023 it began deploying a system designed to reduce demographic disparities in ad delivery.
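A basic version of the fairness check described above compares accuracy per group and flags large gaps. The sketch below is illustrative: the function names, the group labels, the sample data, and the use of 5 points (the lower end of the 5–10 range) as the review threshold are assumptions for the example.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """records: iterable of (group, predicted, actual) tuples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        correct[group] += int(predicted == actual)
    return {group: correct[group] / total[group] for group in total}


def max_gap_points(accuracies):
    """Largest accuracy difference between any two groups, in percentage points."""
    values = list(accuracies.values())
    return (max(values) - min(values)) * 100


# Hypothetical evaluation data: group A is right 9 times out of 10, group B 7 out of 10.
records = (
    [("A", 1, 1)] * 9 + [("A", 1, 0)] * 1
    + [("B", 1, 1)] * 7 + [("B", 0, 1)] * 3
)

accuracies = accuracy_by_group(records)
gap = max_gap_points(accuracies)
REVIEW_THRESHOLD_POINTS = 5  # assumed: lower end of the 5-10 point range
needs_review = gap > REVIEW_THRESHOLD_POINTS
print(accuracies, round(gap, 1), needs_review)
```

Accuracy is only one lens. Depending on the decision at stake, teams may also compare false positive and false negative rates across groups.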

Incorporate feedback mechanisms within your app, such as thumbs-up/down buttons next to AI outputs, to gather user input for retraining models. For conversational interfaces, include options like “Talk to a human” to build trust and improve user experience. Continuously monitor your AI’s live performance for any signs of drift or disparities, and retrain models with cleaner, more diverse data when needed.

Good vs Poor AI Design Practices

Ethical AI design boils down to key principles that differentiate effective practices from ineffective ones. The table below highlights these differences across critical areas:

| Area | Good Practice | Poor Practice |
|---|---|---|
| Consent | Clear, specific consent for AI use; easy opt-out options; no manipulative design | Bundled, unclear consent; pre-checked boxes; making opt-out difficult |
| Data Minimization | Collect only necessary data; anonymize or aggregate when possible | Collect excessive data "just in case"; keep data indefinitely |
| Transparency | Clearly label AI features and explain data usage | Hide AI use; rely on generic or overly legalistic privacy statements |
| Explainability | Offer simple, user-friendly explanations for AI outputs | Provide no explanations, leaving users in the dark |
| Bias Safeguards | Regular audits for bias; diverse training data; human oversight | Skip testing for bias; assume the model is unbiased |
| Error Handling | Provide fallback options and clear error messages; allow easy corrections | Fail silently; blame users for errors; offer no way to fix issues |

Following good practices not only strengthens user trust but also helps you avoid regulatory issues. Poor design – like showing “Suggested for you” without explaining why – can frustrate users and erode confidence. Similarly, inadequate error handling, such as a chatbot returning incorrect results without a way to recover, can lead to privacy risks if the wrong data is stored.

To make AI interactions more user-friendly, ensure accessibility features like screen-reader compatibility, captions for audio, high-contrast text, and options to disable certain adaptive behaviors. Include fallback paths that explain when AI is uncertain and allow users to adjust or correct outputs. These thoughtful design choices go a long way in creating responsible and effective AI systems.
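A fallback path for uncertain AI output can be as simple as a confidence check before anything is shown. This is a sketch under assumptions: the `respond` function, the 0.7 threshold, and the action labels are hypothetical, and it presumes the model reports a confidence score between 0 and 1.

```python
def respond(answer: str, confidence: float, threshold: float = 0.7) -> dict:
    """Return the AI answer when confident; otherwise a clearly labeled fallback."""
    if confidence >= threshold:
        return {
            "text": answer,
            "source": "ai",
            "actions": ["Edit", "Was this helpful?"],  # let users correct or rate the output
        }
    return {
        "text": "I'm not sure about this one. Would you like to rephrase or talk to a human?",
        "source": "fallback",
        "actions": ["Rephrase", "Talk to a human"],
    }


confident = respond("Your order ships Tuesday.", confidence=0.92)
uncertain = respond("Your order ships Tuesday.", confidence=0.41)
```

The right threshold depends on the cost of a wrong answer, so it is worth tuning against real feedback instead of fixing it up front.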

Building and Improving AI Features

Start with a focused, impactful AI feature and refine it based on real-world feedback to ensure its success.

Launching Your First AI Feature

For your first AI feature, aim for something straightforward yet impactful. A great example is a contextual help tool, like a smart chatbot that assists users in real time. These AI-driven assistants use natural language processing to answer questions, explain features, and guide users through the app – all without needing massive amounts of training data. Other strong options include personalized content recommendations or enhanced search powered by natural-language understanding.

Set clear success metrics from the start. Track things like engagement, task completion rates, user adoption, conversion rates, revenue per user, and churn. Measure your AI’s performance by monitoring accuracy and response time. Use A/B testing to compare the AI-enhanced experience against a control group. Run these tests for two to four weeks, keeping an eye on metrics like session duration, retention, and user satisfaction. Research shows that apps leveraging AI personalization can achieve efficiency gains of 20–30%, making the results both measurable and meaningful.
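When the A/B test ends, a two-proportion z-test is one common way to judge whether the difference in conversion rates is likely to be real. The sketch below uses only the standard library. The function name and the sample counts are hypothetical, and the test assumes large, independent samples.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in conversion rates B - A."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail of the standard normal
    return z, p_value


# Hypothetical results: control converts 400 of 5,000 users, the AI variant 470 of 5,000.
z, p_value = two_proportion_z_test(400, 5000, 470, 5000)
significant = p_value < 0.05
print(f"z = {z:.2f}, p = {p_value:.3f}, significant = {significant}")
```

Deciding on the sample size and the significance level before the test starts helps avoid stopping early on a lucky streak.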

Refining AI Features Over Time

Once your initial AI feature is live, focus on refining it through continuous iteration. Use the performance metrics you’ve already established, combined with new data, to guide your improvements. Collect quantitative data, such as usage patterns, drop-off rates, and prediction accuracy, alongside qualitative feedback from surveys, session replays, and in-app ratings. This will help you pinpoint issues like low accuracy or confusing UI elements. Regularly retrain your AI models with updated user data and adjust interfaces to address pain points. For instance, if your fitness app’s AI predictions start at 75% accuracy, incorporating user behavior data can push that accuracy to 92% over time.

Aim for prediction accuracy between 85–95%, user adoption rates above 70%, and robust error recovery mechanisms. Simple feedback tools – like thumbs-up/down buttons, “Was this helpful?” prompts, or options to “Talk to a human” – can provide valuable insights for further improvements. A/B testing remains crucial for experimenting with new model configurations, prompts, or UI designs. This iterative, data-driven process can lead to stronger user loyalty and satisfaction.
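These targets are easy to track in a small recurring report. The sketch below is illustrative only: the `review_metrics` function and the sample counts are hypothetical, and it treats 85% accuracy and 70% adoption as minimums based on the ranges given above.

```python
def review_metrics(correct, predictions, adopters, active_users, thumbs_up, thumbs_down):
    """Summarize accuracy, adoption, and in-app feedback against the stated targets."""
    accuracy = correct / predictions
    adoption = adopters / active_users
    helpful_rate = thumbs_up / (thumbs_up + thumbs_down)
    return {
        "accuracy": accuracy,
        "adoption": adoption,
        "helpful_rate": helpful_rate,
        "accuracy_ok": accuracy >= 0.85,  # lower end of the 85-95% target
        "adoption_ok": adoption > 0.70,   # adoption target: above 70%
    }


# Hypothetical numbers from one review period.
report = review_metrics(
    correct=920, predictions=1000,
    adopters=650, active_users=1000,
    thumbs_up=300, thumbs_down=100,
)
print(report)
```

In this example the model meets the accuracy target but adoption falls short, which points toward discoverability or interface work before further model tuning.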

Working Across Teams

Refining AI features requires seamless collaboration across multiple teams. Success depends on the combined efforts of designers, developers, data scientists, and product owners. Designers craft intuitive interfaces and ensure the AI’s decision-making is easy to follow. Developers focus on integrating models and optimizing performance. Data scientists fine-tune algorithms, address bias, and monitor for drift, while product owners ensure the features align with business objectives and prioritize experimentation.

Establish clear workflows using shared tools and regular check-ins to keep everyone aligned. For example, when developing a voice interface, designers can prototype conversational flows, developers integrate NLP APIs, data scientists train models on user intent, and product owners validate the feature through user testing. Weekly standups and project management tools like Jira can help maintain alignment.

This collaborative approach ensures your AI features evolve alongside user needs, driving continuous improvement and long-term success.

Conclusion

AI is transforming app design by offering personalized, predictive, and conversational experiences that put users first. Success in 2026 will depend on combining AI advancements with responsible practices, such as transparent recommendations, clear privacy settings, and proactive bias management. With 80% of consumers preferring companies that provide personalized experiences, the case for thoughtful AI integration is hard to ignore.

While AI can curate and streamline user interactions, human oversight is vital. AI features should reflect your brand’s values and enhance usability and trust. Start by evaluating your app for opportunities to incorporate AI – whether it’s through smart chatbots, tailored content feeds, or predictive search. Prioritize accessibility and ethical considerations from the outset to create a foundation for success.

The path to effective AI integration doesn’t stop at implementation. Regular refinement, guided by user feedback, ensures features remain impactful and aligned with user needs. Launch a focused AI feature, measure its performance, and adapt as necessary. Collaboration across design, development, and data science teams – discussed earlier – is essential to keep your AI tools effective, compliant, and in tune with your business goals.

For those looking to bring these strategies to life, working with experts can provide a significant edge. Visual Soldiers, an Atlanta-based agency, specializes in app design and custom development that incorporates the latest AI trends for 2026. Their flexible model connects cutting-edge AI capabilities with brand strategy and ethical design, without the need to build in-house teams. From creating AI-powered platforms like Airia to developing Curait’s AI-Powered Sound Identity in September 2026, they’ve delivered tailored AI solutions that drive results.

The future of app design lies in merging AI’s predictive power with a commitment to user-first principles. By focusing on features that save time, minimize friction, and build trust, you can create apps that not only meet the expectations of 2026 but also earn lasting loyalty from your users.

FAQs

How is AI changing app personalization in 2026?

AI is transforming the way apps personalize experiences in 2026 by analyzing user behavior, preferences, and patterns as they happen. This enables apps to provide content, features, and recommendations that feel truly tailored to each individual.

With AI-powered insights, developers can design experiences that go beyond just meeting user expectations – they keep people engaged and satisfied over time. Whether it’s through adaptive interfaces or predictive suggestions, AI is making apps smarter, more intuitive, and better equipped to align with what users genuinely want.

What ethical principles should guide AI in app design?

When weaving AI into app design, three pillars should guide the process: user privacy, fairness, and transparency. Safeguarding personal data is non-negotiable – comply with U.S. privacy laws and implement strong security protocols to protect users’ information. At the same time, openly communicate how AI-driven features function. This not only fosters trust but also ensures users feel empowered and in control.

Another critical aspect is tackling bias in AI algorithms. Unchecked bias can lead to unintended discrimination, which undermines user trust. By being upfront about how AI decisions are made and offering clear, understandable explanations, designers can create apps that are not only ethical but also resonate with the expectations of users in 2026.

How can app developers keep AI features compliant with U.S. regulations?

To meet U.S. regulations, app developers need to focus on data privacy and adhere to key laws such as the California Consumer Privacy Act (CCPA). Apps that also serve users in the European Union must follow the General Data Protection Regulation (GDPR) as well. This means building clear systems for obtaining user consent and being upfront about how data is collected and used.

Conducting regular compliance audits and keeping track of changes in federal and state AI policies can help developers avoid legal pitfalls. Beyond legal requirements, creating AI systems that prioritize fairness and accountability not only ensures compliance but also fosters trust among users.